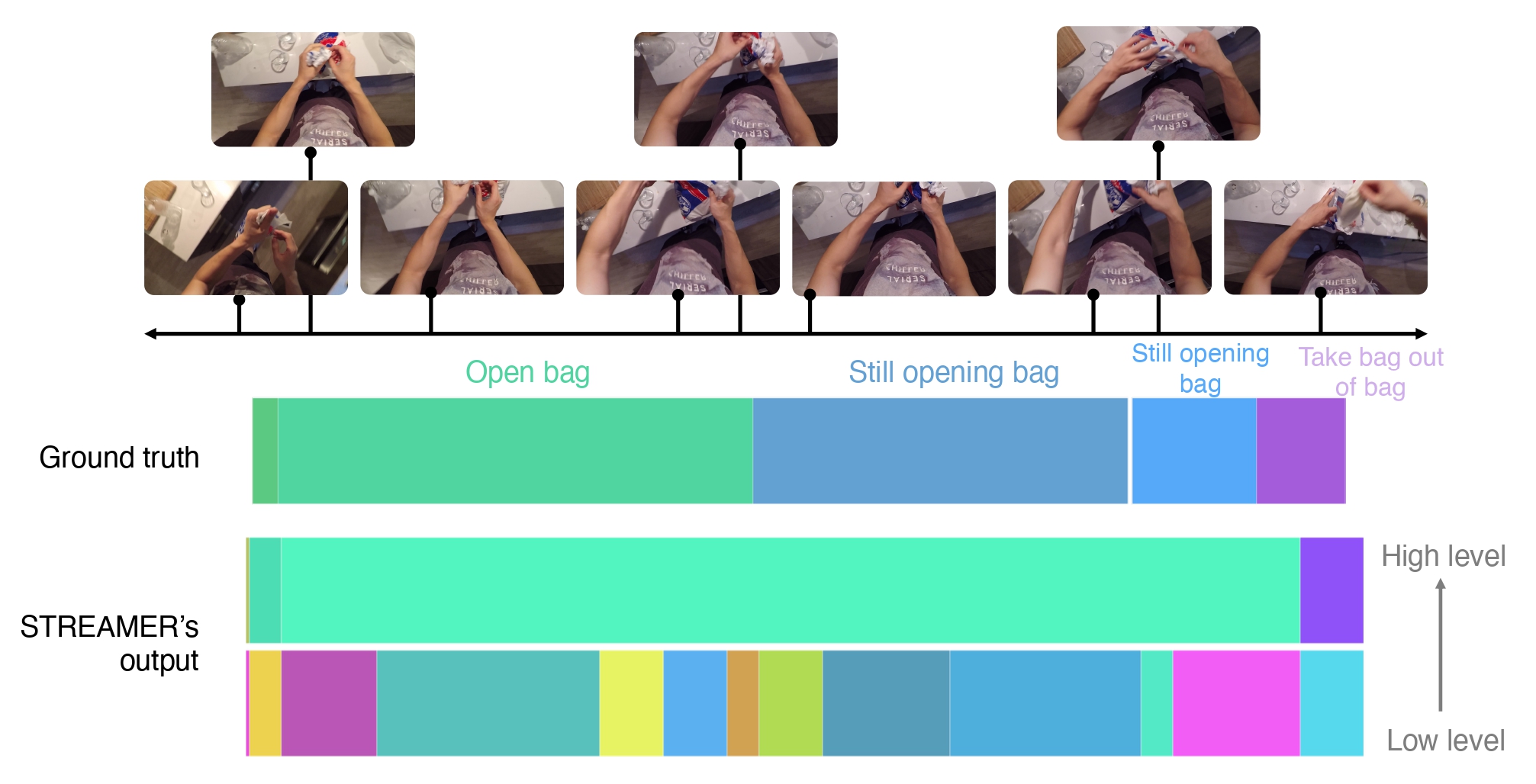

We present a novel self-supervised approach for hierarchical representation learning and segmentation of perceptual inputs in a streaming fashion. Our research addresses how to semantically group streaming inputs into chunks at various levels of a hierarchy while simultaneously learning, for each chunk, robust global representations throughout the domain. To achieve this, we propose STREAMER, an architecture that is trained layer by layer, adapting to the complexity of the input domain. In our approach, each layer is trained with two primary objectives: making accurate predictions into the future and providing other levels with the information they need to achieve the same objective. The event hierarchy is constructed by detecting prediction error peaks at different levels, where a detected boundary triggers a bottom-up information flow. At an event boundary, the encoded representation of inputs at one layer becomes the input to a higher-level layer. Additionally, we design a communication module that facilitates top-down and bottom-up exchange of information during the prediction process. Notably, our model is fully self-supervised and trained in a streaming manner, requiring only a single pass over the training data. This means that the model encounters each input only once and does not store the data. We evaluate the performance of our model on the egocentric EPIC-KITCHENS dataset, specifically focusing on temporal event segmentation. Furthermore, we conduct event retrieval experiments using the learned representations to demonstrate the high quality of our video event representations.

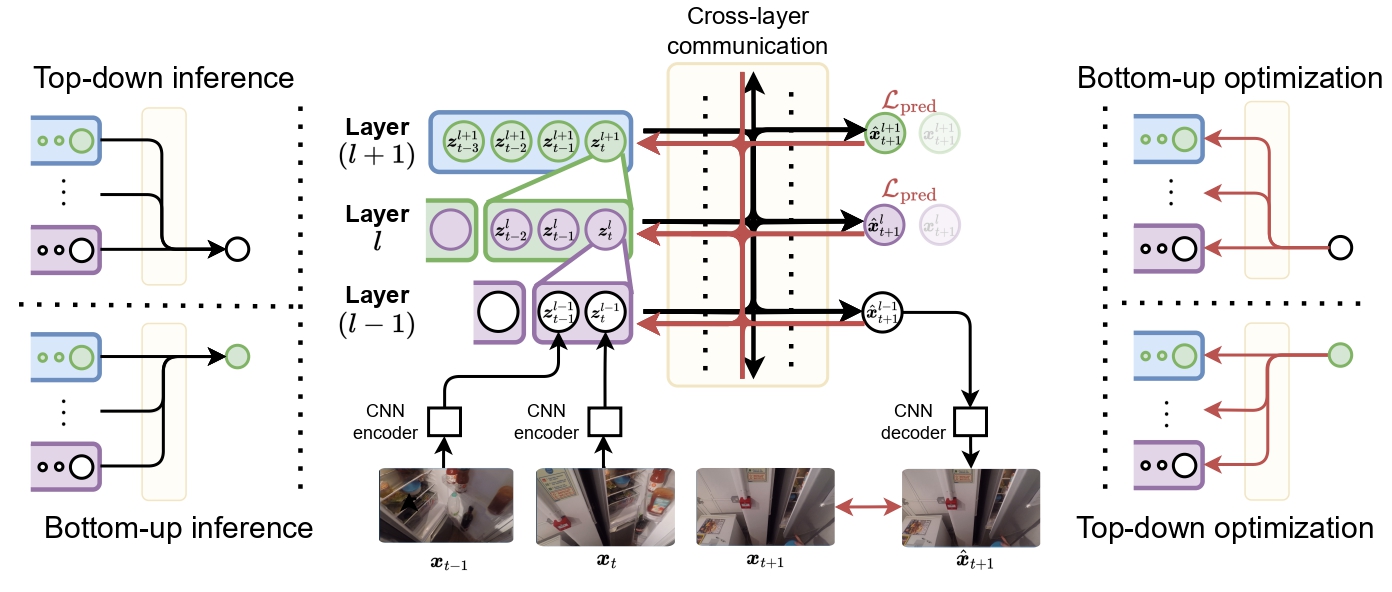

Overview of our full architecture. Given a stream of inputs at any layer, our model combines them into a bottleneck representation, which becomes the input to the layer above it. The cross-layer communication can be broken down into top-down and bottom-up contextualized inference (left) and optimization (right).

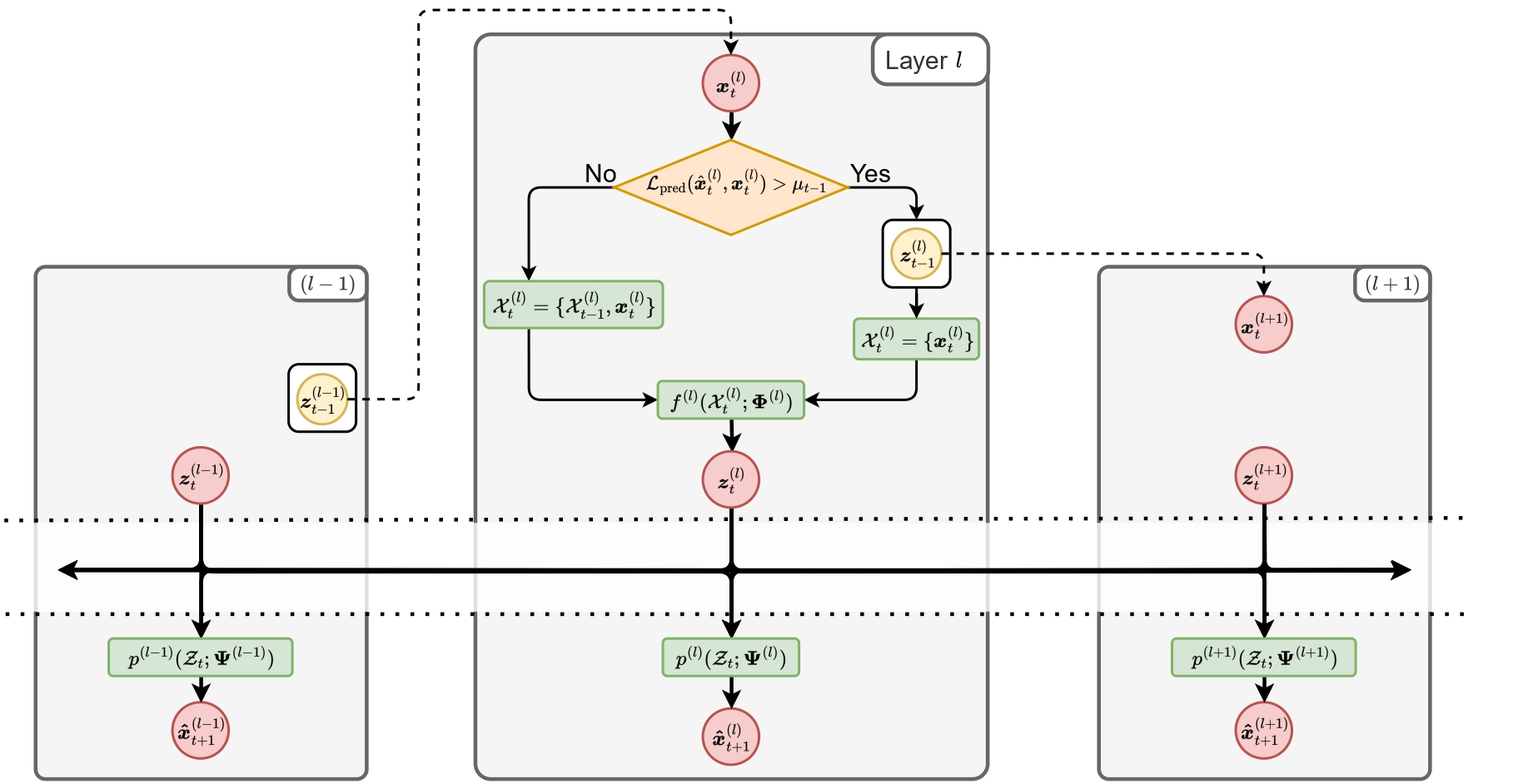

A diagram illustrating information flow across stacked identical layers. Each layer compares its prediction $\hat{\boldsymbol{x}}_t$ with the input $\boldsymbol{x}_t$ received from the layer below. If the prediction error $\mathcal{L}_\text{pred}$ exceeds a threshold $\mu_{t-1}$, the current representation $\boldsymbol{z}_{t-1}$ becomes the input to the layer above, and the working set is reset with $\boldsymbol{x}_t$; otherwise, $\boldsymbol{x}_t$ is appended to the working set $\mathcal{X}_t$.
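The boundary rule in the caption above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the authors' implementation: the class names `StreamingLayer` and `StreamingHierarchy` are hypothetical, the learned encoder and predictor are replaced by the mean of the working set, the prediction error is a plain squared error, and the threshold $\mu$ is assumed to be the running mean of all past prediction errors. Only the control flow (compare the prediction with $\boldsymbol{x}_t$, then either append to $\mathcal{X}_t$ or pass $\boldsymbol{z}_{t-1}$ upward and reset) follows the description.

```python
import numpy as np


class StreamingLayer:
    """Toy stand-in for one layer (hypothetical; not the STREAMER code).

    Assumptions made only for this sketch:
      * z, the representation of the working set, is the mean of its inputs;
      * the prediction x_hat of the next input is simply z;
      * the threshold mu is the running mean of all past prediction errors.
    """

    def __init__(self):
        self.working_set = []  # X_t: inputs received since the last boundary
        self.err_sum = 0.0     # running statistics for the threshold mu
        self.err_count = 0

    def representation(self):
        # z: summary of the working set (a learned encoder in the paper)
        return np.mean(self.working_set, axis=0)

    def step(self, x):
        """Consume x_t; return z_{t-1} on a boundary, otherwise None."""
        x = np.asarray(x, dtype=float)
        if not self.working_set:
            self.working_set.append(x)
            return None
        z_prev = self.representation()
        x_hat = z_prev                              # toy prediction of x_t
        err = float(np.mean((x_hat - x) ** 2))      # L_pred as squared error
        mu = self.err_sum / self.err_count if self.err_count else np.inf
        self.err_sum += err
        self.err_count += 1
        if err > mu:
            self.working_set = [x]                  # reset the working set with x_t
            return z_prev                           # z_{t-1} goes to the layer above
        self.working_set.append(x)                  # no boundary: extend X_t
        return None


class StreamingHierarchy:
    """Stack of toy layers; a boundary at layer i feeds z to layer i + 1."""

    def __init__(self, num_layers):
        self.layers = [StreamingLayer() for _ in range(num_layers)]

    def step(self, x):
        """Feed x_t to the bottom layer; return indices of layers that fired."""
        fired = []
        signal = x
        for i, layer in enumerate(self.layers):
            signal = layer.step(signal)
            if signal is None:  # no boundary here, so nothing propagates upward
                break
            fired.append(i)
        return fired


if __name__ == "__main__":
    stream = [0.0] * 4 + [5.0] * 4 + [0.0] * 4 + [5.0] * 4
    layer = StreamingLayer()
    print([t for t, x in enumerate(stream) if layer.step(x) is not None])
    # → [4, 8, 12]
```

On this piecewise-constant toy stream, the bottom layer flags a boundary exactly where the value changes, and each flagged representation becomes a single input to the next layer. Each input is consumed once and only the current working set is kept, in the spirit of the single-pass streaming setting; in the actual model the aggregation, prediction, and cross-layer communication are learned.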

@inproceedings{mounir2023streamer,
  title={STREAMER: Streaming Representation Learning and Event Segmentation in a Hierarchical Manner},
  author={Mounir, Ramy and Vijayaraghavan, Sujal and Sarkar, Sudeep},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
  year={2023}
}

This research was supported by the US National Science Foundation Grants CNS 1513126 and IIS 1956050. The authors would like to thank Margrate Selwaness for her help with the result visualizations.

This webpage was in part inspired by this template.