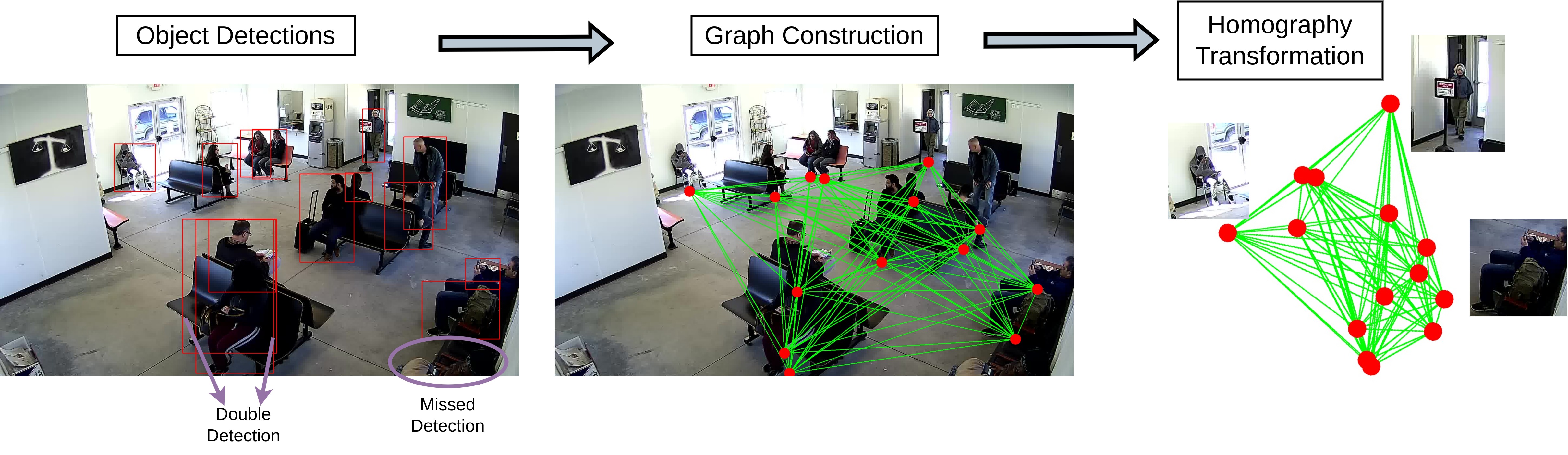

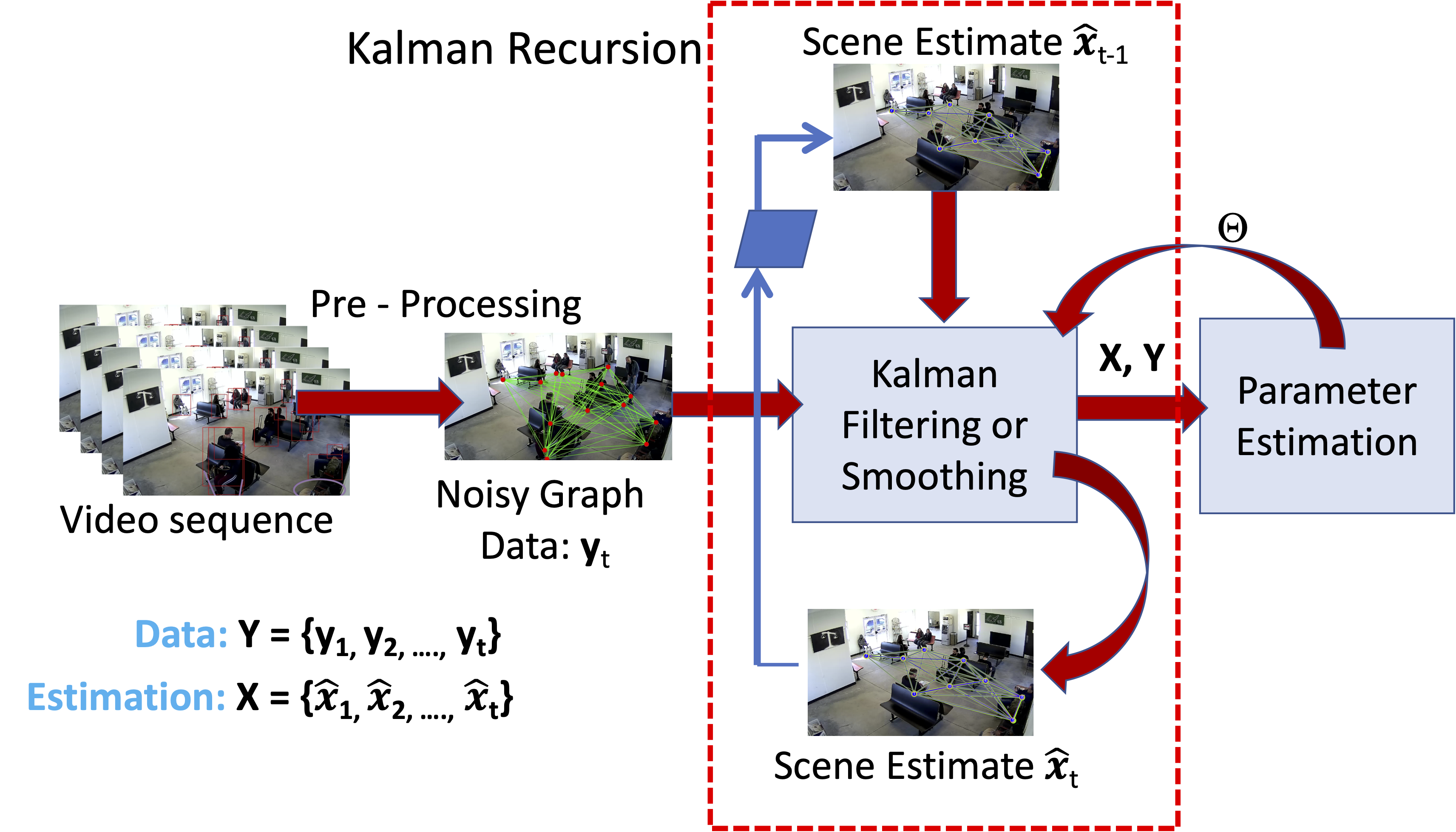

Graph-based representations are increasingly popular for modeling and analyzing video data, especially in object tracking and scene understanding applications. An essential tool in this approach is therefore statistical inference on the graphical time series associated with videos. This paper develops a Kalman-smoothing method for estimating graphs from noisy, cluttered, and incomplete data. The main challenge is to find and preserve the registration of nodes (salient detected objects) across time frames when the data contain noise and clutter due to false and missing nodes. First, we introduce a quotient-space representation of graphs that incorporates temporal registration of nodes, and we use that metric structure to impose a dynamical model on graph evolution. Then, we derive a Kalman smoother, adapted to the quotient-space geometry, to estimate dense, smooth trajectories of graphs. We demonstrate this framework on simulated data and on actual video graphs extracted from the Multiview Extended Video with Activities (MEVA) dataset. The framework successfully estimates graphs despite noise, clutter, and missed detections.
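To give a rough sense of what Kalman smoothing with missed detections involves, here is a minimal sketch for a single scalar feature under an assumed random-walk model. It is not the paper's method: the quotient-space geometry and node registration are omitted entirely, and the function name `kalman_smooth`, its parameters, and the noise model are illustrative assumptions.

```python
from typing import List, Optional, Tuple


def kalman_smooth(
    observations: List[Optional[float]],
    process_var: float = 0.01,
    obs_var: float = 1.0,
    init_mean: float = 0.0,
    init_var: float = 1e3,
) -> Tuple[List[float], List[float]]:
    """Scalar Kalman filter plus Rauch-Tung-Striebel smoother.

    Assumed model (hypothetical, for illustration only):
        x_t = x_{t-1} + w_t,  w_t ~ N(0, process_var)
        y_t = x_t + v_t,      v_t ~ N(0, obs_var)
    An observation of None marks a missed detection at that frame.
    """
    n = len(observations)
    pred_mean, pred_var = [0.0] * n, [0.0] * n
    filt_mean, filt_var = [0.0] * n, [0.0] * n

    # Forward pass: predict, then update only where a measurement exists.
    mean, var = init_mean, init_var
    for t, y in enumerate(observations):
        if t > 0:
            var = var + process_var  # random-walk prediction
        pred_mean[t], pred_var[t] = mean, var
        if y is not None:
            gain = var / (var + obs_var)
            mean = mean + gain * (y - mean)
            var = (1.0 - gain) * var
        filt_mean[t], filt_var[t] = mean, var

    # Backward pass: refine each estimate using information from later frames.
    smooth_mean, smooth_var = filt_mean[:], filt_var[:]
    for t in range(n - 2, -1, -1):
        c = filt_var[t] / pred_var[t + 1]
        smooth_mean[t] = filt_mean[t] + c * (smooth_mean[t + 1] - pred_mean[t + 1])
        smooth_var[t] = filt_var[t] + c * c * (smooth_var[t + 1] - pred_var[t + 1])
    return smooth_mean, smooth_var


# Frame 1 has no detection; the smoother fills it in from its neighbours.
means, variances = kalman_smooth([0.0, None, 2.0])
print(means)
```

The backward pass is what lets the estimate at a frame with a missed detection draw on later frames as well as earlier ones, which a forward-only filter cannot do.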

@inproceedings{bal2022bayesian,
  title={Bayesian Tracking of Video Graphs Using Joint Kalman Smoothing and Registration},
  author={Bal, Aditi Basu and Mounir, Ramy and Aakur, Sathyanarayanan and Sarkar, Sudeep and Srivastava, Anuj},
  booktitle={European Conference on Computer Vision},
  year={2022}
}

This research was supported in part by the US National Science Foundation grants 1955154, IIS 2143150, IIS 1955230, CNS 1513126, and IIS 1956050.
