Long-term Monitoring of Bird Flocks in the Wild

Kshitiz, Sonu Shreshtha, Ramy Mounir, Mayank Vatsa, Richa Singh, Saket Anand, Sudeep Sarkar, Severam Mali Parihar

Abstract

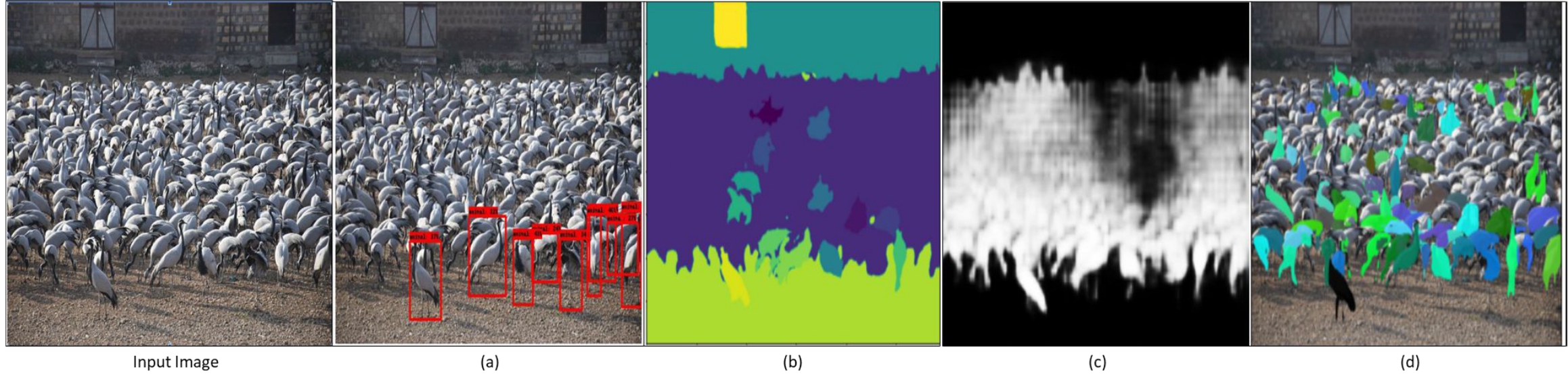

This work highlights the importance of wildlife monitoring for conservation and conflict management, and notes the success of AI-based camera traps in guiding conservation planning. The project, part of the NSF-TIH Indo-US partnership, aims to analyze long-duration bird videos and to address the challenges of video analysis at feeding and nesting sites. Its goal is to create datasets and tools for automated video analysis that support the study of bird behavior. A key achievement so far is a dataset of high-quality images of Demoiselle cranes, which exposes the limitations of current methods on tasks such as segmentation and detection. The ongoing project aims to expand this dataset and to develop better video analytics for wildlife monitoring.

Citation

@inproceedings{BirdMonitoring,
  title = {Long-term Monitoring of Bird Flocks in the Wild},
  author = {Kshitiz and Sonu Shreshtha and Ramy Mounir and Mayank Vatsa and Richa Singh and Saket Anand and Sudeep Sarkar and Severam Mali Parihar},
  booktitle = {International Joint Conference on Artificial Intelligence},
  year = {2023},
  note = {IJCAI}
}