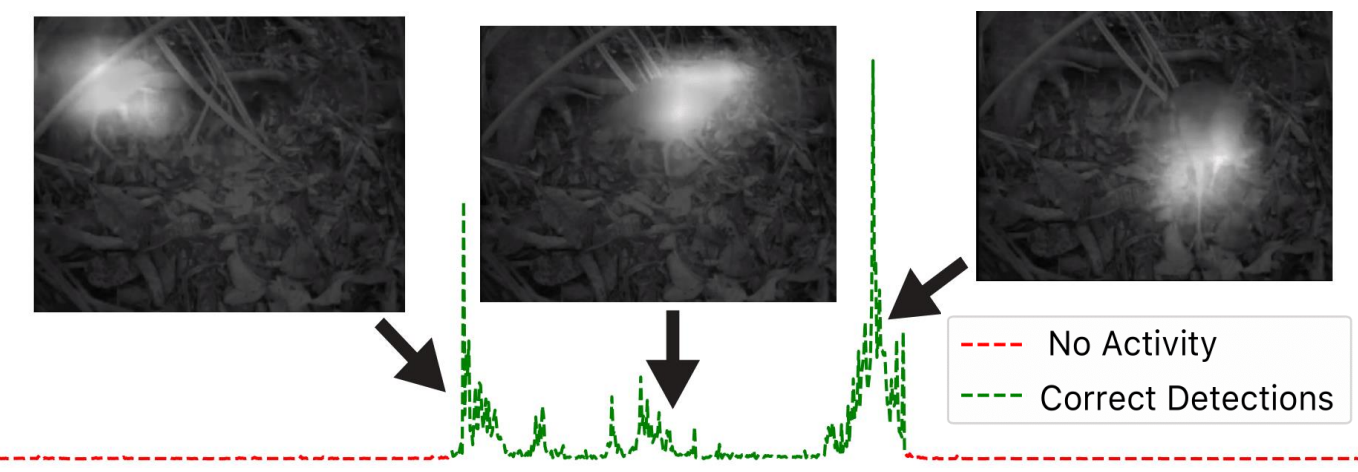

Using offline training schemes, researchers have tackled the event segmentation problem by providing full or weak supervision through manually annotated labels, or through self-supervised epoch-based training. Most works consider videos that are at most tens of minutes long. We present a self-supervised perceptual prediction framework capable of temporal event segmentation by building stable representations of objects over time, and we demonstrate it on long videos spanning several days. The approach is deceptively simple but quite effective. We rely on predictions of high-level features computed by a standard deep learning backbone. For prediction, we use an LSTM augmented with an attention mechanism and trained in a self-supervised manner using the prediction error. The self-learned attention maps effectively localize and track the event-related objects in each frame. The proposed approach does not require labels. It requires only a single pass through the video, with no separate training set. Given the lack of datasets of very long videos, we demonstrate our method on 10 days (254 hours) of continuous wildlife monitoring video that we collected with the required permissions. We find that the approach is robust to various environmental conditions such as day/night transitions, rain, sharp shadows, and wind. For temporally locating events, we achieve an 80% recall rate at a 20% false-positive rate for frame-level segmentation. At the activity level, we achieve an 80% activity recall rate with one false activity detection every 50 minutes. We will make the dataset, which is the first of its kind, and the code available to the research community.
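The single-pass, label-free learning idea can be illustrated with a minimal sketch. It assumes each frame has already been reduced to a feature vector. For brevity, it swaps the paper's attention-augmented LSTM for a simple linear predictor updated by one SGD step per frame. The function name `online_prediction_errors` and the learning rate are hypothetical. The point is only that the model learns while it streams through the video once, and the per-frame prediction error doubles as the event signal.

```python
from typing import List, Sequence


def online_prediction_errors(features: Sequence[Sequence[float]],
                             lr: float = 0.05) -> List[float]:
    """Stream once over per-frame feature vectors: predict x[t+1] from x[t]
    with a linear map W, record the mean squared prediction error, then take
    one SGD step on W. A linear stand-in for the paper's attention LSTM
    (assumption, not the authors' model)."""
    d = len(features[0])
    # Start from the identity map: "the next frame looks like this one".
    W = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
    errors: List[float] = []
    for t in range(len(features) - 1):
        x, target = features[t], features[t + 1]
        pred = [sum(W[i][j] * x[j] for j in range(d)) for i in range(d)]
        diff = [p - y for p, y in zip(pred, target)]
        errors.append(sum(e * e for e in diff) / d)  # per-frame MSE
        # Gradient of the MSE w.r.t. W[i][j] is 2 * diff[i] * x[j] / d.
        for i in range(d):
            for j in range(d):
                W[i][j] -= lr * 2.0 * diff[i] * x[j] / d
    return errors
```

For a toy stream that is static for five frames and then switches to a new static scene, `online_prediction_errors([[1.0, 0.0]] * 5 + [[0.0, 1.0]] * 5)` gives zero error everywhere except a spike at the transition. No epochs or held-out training set are involved. This is the property the abstract emphasizes.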

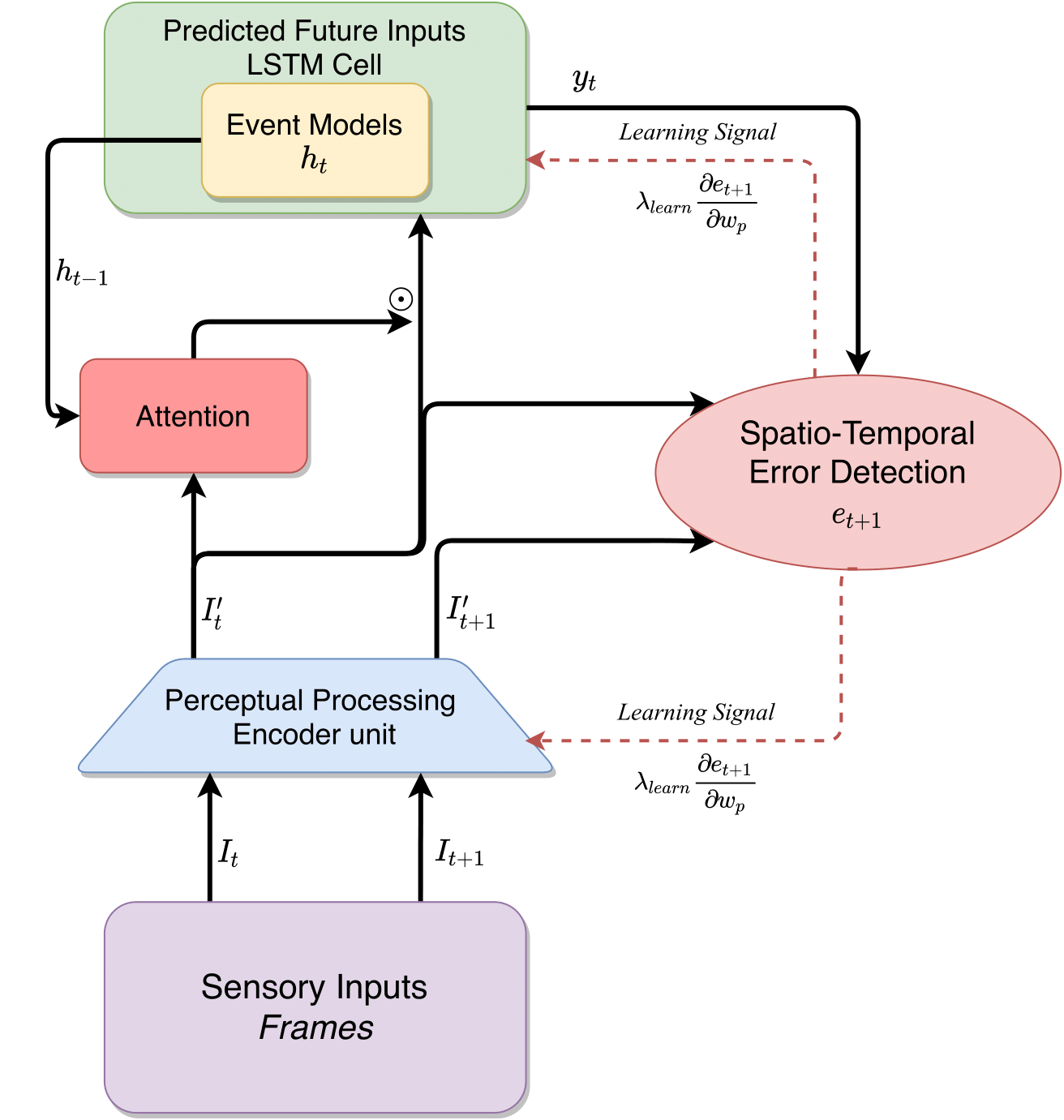

The architecture of the self-learning, perceptual prediction algorithm. Input frames from each time instant are encoded into high-level features using a deep learning stack. An attention overlay, computed from the inputs of the previous time instant, is applied to these features, and the result is fed to an LSTM. The training loss compares the features predicted from the current frame with the features computed from the next frame.
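The attention overlay in the caption can be illustrated with a minimal sketch. It assumes the backbone output is a grid of spatial locations, each with a feature vector, and that the query is the LSTM hidden state from the previous time step. It also assumes a scaled dot-product score. The paper's exact attention formulation may differ (an additive, Bahdanau-style score is another common choice), and the name `attend` is hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def attend(feature_map: Sequence[Sequence[float]],
           query: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Score each spatial location against the previous-step query, softmax
    the scores into attention weights, and return (weights, attended vector).
    Scaled dot-product scoring is an assumption for illustration."""
    d = len(query)
    scores = [sum(f[k] * query[k] for k in range(d)) / math.sqrt(d)
              for f in feature_map]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    weights = [e / z for e in exps]  # one weight per location, sums to 1
    # Attention-weighted sum of location features, to be fed to the LSTM.
    attended = [sum(w * f[k] for w, f in zip(weights, feature_map))
                for k in range(d)]
    return weights, attended
```

With a flattened 2x2 map `[[0.0, 1.0], [3.0, 0.0], [1.0, 1.0], [0.0, 0.0]]` and query `[1.0, 0.0]`, the second location receives the largest weight. Reshaped back onto the grid, such weights form the kind of map shown in the attention visualizations below.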

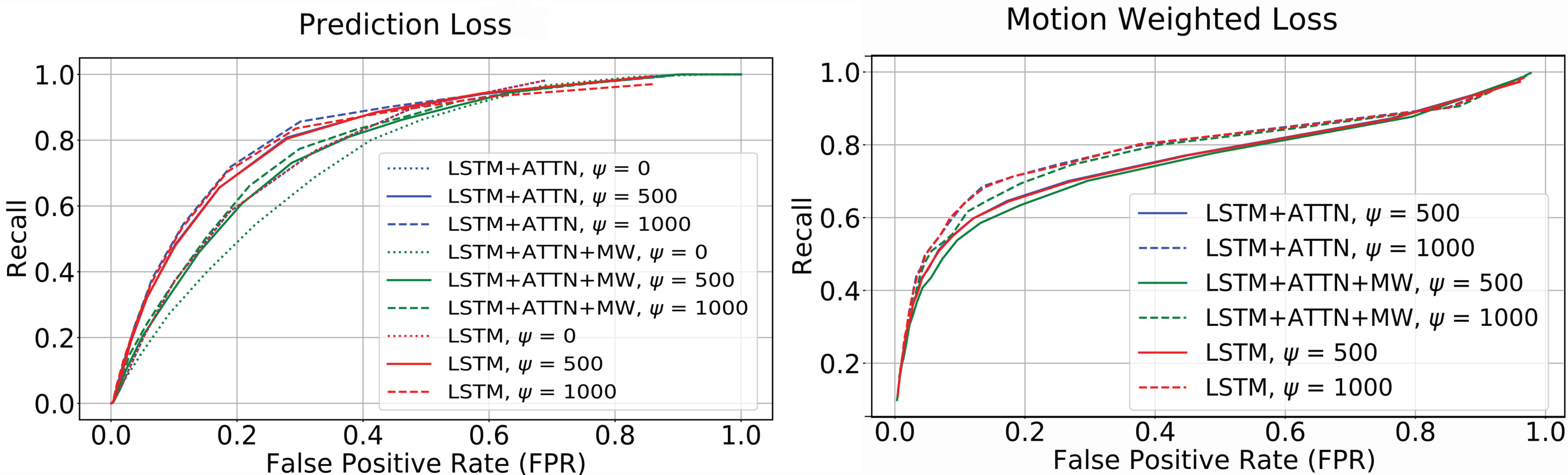

Frame-level event segmentation ROCs when activities are detected based on simple thresholding of the prediction and motion weighted loss signals. Plots are shown for different ablation studies.
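Frame-level detection by simple thresholding, and the ROC it produces, can be sketched as follows. A frame is flagged as an event when its loss signal exceeds a fixed threshold, and sweeping the threshold traces out (false-positive rate, recall) pairs. The sketch assumes a per-frame signal (prediction loss or motion-weighted loss) and binary ground-truth labels. How the motion weighting is computed is not specified here, and the name `roc_points` is hypothetical.

```python
from typing import List, Sequence, Tuple


def roc_points(signal: Sequence[float], labels: Sequence[int],
               thresholds: Sequence[float]) -> List[Tuple[float, float]]:
    """For each threshold, flag frames whose signal exceeds it and return the
    (false-positive rate, recall) pair against binary frame labels."""
    pos = sum(1 for y in labels if y == 1)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need both event and non-event frames")
    points = []
    for th in thresholds:
        tp = sum(1 for s, y in zip(signal, labels) if s > th and y == 1)
        fp = sum(1 for s, y in zip(signal, labels) if s > th and y == 0)
        points.append((fp / neg, tp / pos))
    return points
```

For the toy signal `[0.1, 0.2, 0.9, 0.8, 0.3, 0.7]` with labels `[0, 0, 1, 1, 0, 0]`, a threshold of 0.5 catches both event frames but also one of four background frames. A threshold of 0.85 gives no false positives but only half the recall. An operating point such as "80% recall at 20% false-positive rate" is one point on such a curve.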

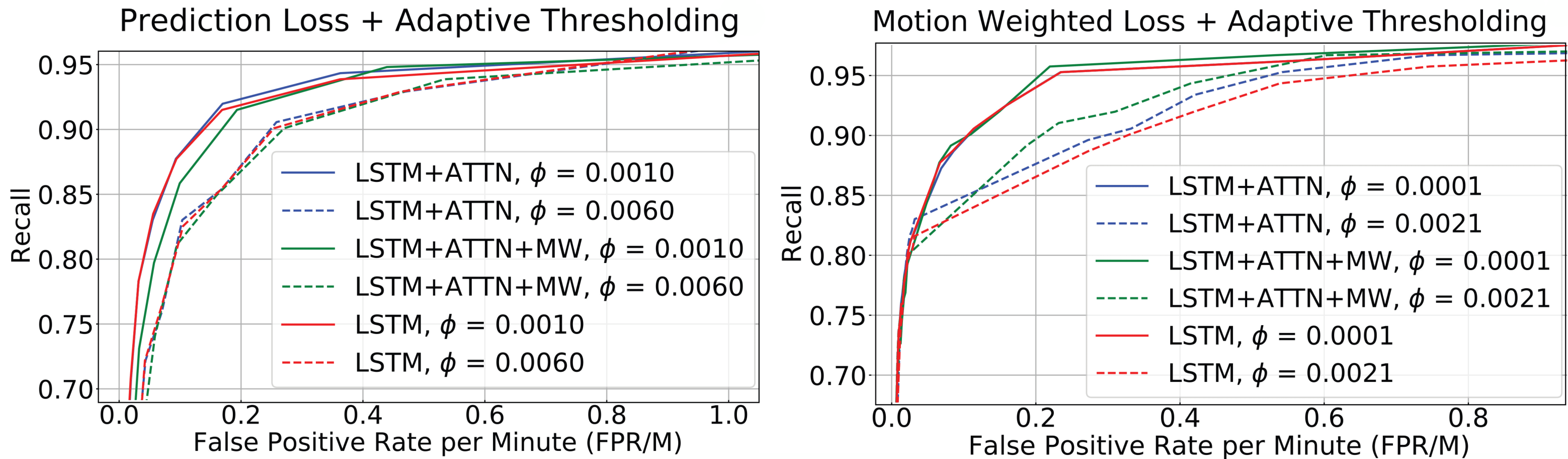

Activity-level event segmentation ROCs when activities are detected based on adaptive thresholding of the prediction and motion weighted loss signals. Plots are shown for different ablation studies.
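Adaptive thresholding can be sketched in a similar way, with one loudly stated assumption: the paper's exact adaptive rule is not given on this page. Here, a frame is flagged when its loss exceeds the mean plus `k` standard deviations of the most recent non-event frames. Consecutive flagged frames are then merged into activity segments. The name `detect_activities` and the `window` and `k` parameters are hypothetical.

```python
import statistics
from typing import List, Optional, Sequence, Tuple


def detect_activities(signal: Sequence[float], window: int = 5,
                      k: float = 2.0) -> List[Tuple[int, int]]:
    """Flag frames whose loss exceeds mean + k * std of the last `window`
    unflagged frames, then merge runs of flagged frames into inclusive
    (start, end) activity segments. Illustrative rule, not the paper's."""
    background: List[float] = []  # loss values of frames judged quiet
    flags: List[bool] = []
    for s in signal:
        history = background[-window:]
        if len(history) < 2:
            flagged = False  # too little history to estimate mean and spread
        else:
            mu = statistics.mean(history)
            sigma = statistics.pstdev(history)
            flagged = s > mu + k * sigma
        flags.append(flagged)
        if not flagged:
            background.append(s)  # only quiet frames update the threshold
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for t, f in enumerate(flags):
        if f and start is None:
            start = t
        elif not f and start is not None:
            segments.append((start, t - 1))
            start = None
    if start is not None:  # activity still running at the end of the signal
        segments.append((start, len(flags) - 1))
    return segments
```

On `[1.0, 1.2, 0.9, 1.1, 1.0, 1.0, 6.0, 6.5, 1.1, 0.9, 1.0, 1.0]` this returns a single activity spanning frames 6 to 7. Excluding flagged frames from the statistics keeps a long activity from raising its own threshold. The trade-off is that a permanent level shift in the signal would never be absorbed into the background. Counting false segments per hour of video gives the kind of "false activity every 50 minutes" figure quoted above.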

Time Lapse Showing the Model's Robustness to Environmental Influences

Attention during Correct Detections

Attention during False Alarms

@inproceedings{mounir2022spatio,
  title={Spatio-Temporal Event Segmentation for Wildlife Extended Videos},
  author={Mounir, Ramy and Gula, Roman and Theuerkauf, J{\"o}rn and Sarkar, Sudeep},
  booktitle={International Conference on Computer Vision and Image Processing},
  pages={48--59},
  year={2022},
  organization={Springer}
}

This dataset was made possible through funding from the Polish National Science Centre (grants NCN 2011/01/M/NZ8/03344 and 2018/29/B/NZ8/02312). Province Sud (New Caledonia) issued all permits required for data collection from 2002 to 2020. This research was supported in part by the US National Science Foundation grant IIS 1956050.

© This webpage was inspired in part by this template.